Background

Although numerous studies have shown the potential of artificial intelligence (AI) systems to drastically improve clinical practice, there are concerns that these systems could replicate existing biases.

Discussion

AI relies on data generated, collected, recorded and labelled by humans. If AI systems remain unchecked, any real-world biases embedded in these data will be incorporated into AI algorithms. Algorithmic bias can be considered an extension, if not a new manifestation, of existing social biases, understood as negative attitudes towards, or the discriminatory treatment of, some groups. In medicine, algorithmic bias can compromise patient safety and risks perpetuating disparities in care and outcomes. Thus, clinicians should consider the risk of bias when deploying AI-enabled tools in their practice.

Artificial intelligence (AI) has the potential to revolutionise clinical medicine, particularly in image-based diagnosis and screening.1 Although AI applications are currently limited mainly to augmenting clinician skills or assisting with specific clinical tasks, full automation may be possible in the near future.2 A more sophisticated set of AI approaches, called deep learning, has found success in detection tasks (eg determining the presence or absence of signs of a disease) and classification tasks (eg classifying cancer type or stage).3 Lin et al4 summarise the ways in which AI will transform primary care, including AI-enabled tools for automated symptom checking, risk-adjusted panelling and resourcing based on the complexity of the medical condition, and the automated generation of clinical notes from clinician–patient conversations.

However, there are concerns that the benefits of AI in improving clinical practice may be hampered by the risk of AI replicating biases. A growing number of cases across industries show that some AI systems reproduce problematic social beliefs and practices that lead to unequal or discriminatory treatment of individuals or groups.5 This phenomenon is referred to as ‘algorithmic bias’, which occurs when the outputs of an algorithm benefit or disadvantage certain individuals or groups more than others without a justified reason for such unequal impacts.6

Aim

This paper aims to provide a brief overview of the concept of algorithmic bias and how it may play out in the context of clinical decision making in general practice.

Understanding bias in AI

The first step in understanding algorithmic bias is dispelling the myth that technologies, especially those that are AI-enabled and data-driven, are free of human values. AI relies on data generated, collected, recorded and labelled by humans. If AI systems remain unchecked, any real-world biases embedded in the data will be incorporated into the AI algorithms.5

Cycle of biases

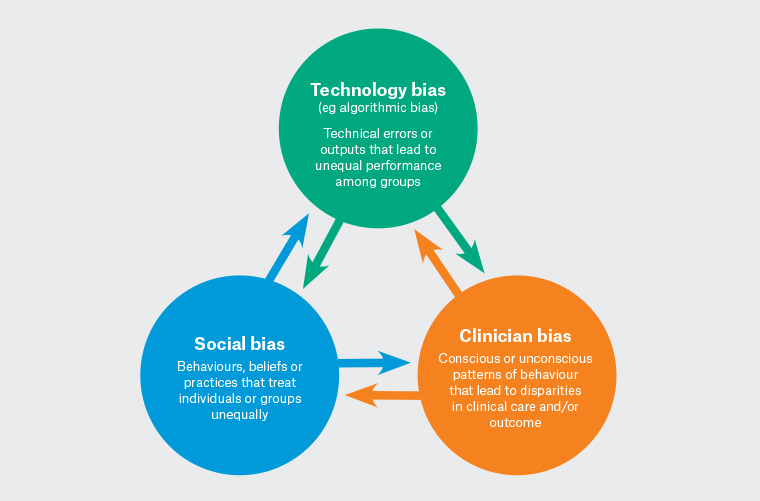

Algorithmic bias can be considered as an extension, if not a new manifestation, of a pernicious cycle of biases that include social, technological and clinician biases (Figure 1). Social bias refers to behaviours, beliefs or practices that treat individuals or groups unequally in an unjust manner. Social biases can manifest as prejudice, understood as negative attitudes or false generalisations felt or expressed towards an individual or group based on characteristics, such as race or ethnicity, sex or gender and socioeconomic status.7 Social biases can also manifest as discrimination, which refers to practices that deny individuals or groups equality of treatment, usually involving actions that directly harm or disadvantage the target individual or group.7

Figure 1. Cycle of biases.

Clinician bias refers to clinicians' cognitive tendencies to make decisions based on incomplete information, on subjective factors or out of force of habit.8 Yuen et al8 describe common cognitive biases, including ‘availability bias’ and ‘confirmation bias’. The former refers to making decisions based on what is immediately familiar to the clinician, whereas the latter refers to giving unjustified preference to findings only because they confirm a diagnosis. Such cognitive biases could amplify health inequities that result from broad social prejudices against marginalised groups. Like any member of society, clinicians are susceptible to culturally pervasive prejudicial beliefs that can manifest as implicit bias, the unconscious association of negative attributes with an individual or a group.9 In Australia, implicit bias may help explain the health inequities experienced by Aboriginal and Torres Strait Islander people (hereafter respectfully referred to as Aboriginal people), particularly for chronic conditions. Evidence shows that implicit bias can lead practitioners to underestimate Aboriginal people’s experience of pain, resulting in less comprehensive assessment and subsequent treatment delays due to mismanagement.10

Medical technologies are said to be biased when their errors or outputs systematically lead to unequal performance among groups.11 Studies have shown that some physical attributes or mechanisms of medical devices are biased against certain demographic groups.11 For example, pulse oximeters, which use light to measure blood oxygenation, have been shown to be less accurate for people with darker skin tones.12 The authors of a retrospective cohort study that examined pulse oximetry sensitivity among Black, Hispanic, Asian and White patients, using data collected from 324 centres in the US, argue that ‘systematic underdiagnosis of hypoxemia in Black patients is likely attributed to technical design issues, but the decision to tolerate the miscalibration for Black patients has been collective despite the available evidence’.13 These findings show that the unequal performance of medical devices such as pulse oximeters risks exacerbating health inequities that affect already marginalised groups.
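‘Unequal performance among groups’ can be made concrete by computing the same performance metric separately for each group and comparing the results. The following is a minimal sketch, assuming a hypothetical list of records that each hold a group label, the reference-standard result (eg hypoxaemia confirmed by arterial blood gas) and the device or model output. The records are invented for illustration and are not data from the cited studies.

```python
from collections import defaultdict

def sensitivity_by_group(records):
    """Return sensitivity (true-positive rate) for each group.

    Each record is a hypothetical (group, has_condition, flagged) tuple:
    has_condition is the reference-standard result and flagged is
    whether the device or model detected the condition.
    """
    true_pos = defaultdict(int)
    positives = defaultdict(int)
    for group, has_condition, flagged in records:
        if has_condition:
            positives[group] += 1
            if flagged:
                true_pos[group] += 1
    # Sensitivity is only defined for groups with at least one positive case
    return {group: true_pos[group] / positives[group] for group in positives}

# Invented toy records: (group, condition present, flagged by device)
records = [
    ("A", True, True), ("A", True, True), ("A", True, True), ("A", True, False),
    ("B", True, True), ("B", True, False), ("B", True, False), ("B", True, False),
    ("A", False, False), ("B", False, False),
]

rates = sensitivity_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates)                          # {'A': 0.75, 'B': 0.25}
print(f"sensitivity gap: {gap:.2f}")  # sensitivity gap: 0.50
```

A device that misses the condition three times as often in group B as in group A could look acceptable on an overall sensitivity figure. This is why the comparison is made group by group rather than on pooled data.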

From old to new

AI algorithms risk perpetuating existing social, technological and clinician biases through the continued use of datasets that do not represent real-world populations. Groups marginalised on the basis of race, sex and sexuality have a long history of being absent from or misrepresented in datasets,14 which typically come from electronic health records or social surveys. AI algorithms trained on non-representative datasets may perform accurate prediction, classification or pattern recognition for the majority groups they were trained on, but tend to perform poorly when recognising patterns outside those groups.14 Algorithmic bias has been demonstrated in algorithms used to identify dermatological lesions, which are often trained on images of lesions from White patients.15 When these algorithms were tested on patients with darker skin, their accuracy was 50% lower than their developers claimed.15 Other examples demonstrate sex-based disparities, such as algorithms for predicting cardiac events that were trained predominantly on datasets from male patients.16
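One simple way to ask whether a training dataset is representative is to compare each group's share of the dataset with its share of the population in which the tool will be used. The following sketch assumes hypothetical image counts by skin-tone group, hypothetical population shares and an arbitrary 0.8 cut-off for flagging under-representation. None of these figures comes from the cited studies.

```python
def representation_ratios(dataset_counts, population_shares):
    """Ratio of each group's share of the dataset to its share of the
    reference population: 1.0 means proportional, below 1.0 means
    under-represented, above 1.0 means over-represented."""
    total = sum(dataset_counts.values())
    return {
        group: (dataset_counts.get(group, 0) / total) / share
        for group, share in population_shares.items()
    }

# Hypothetical training-image counts and population shares (illustration only)
dataset_counts = {"lighter": 900, "darker": 100}
population_shares = {"lighter": 0.6, "darker": 0.4}

ratios = representation_ratios(dataset_counts, population_shares)
for group, ratio in ratios.items():
    # 0.8 is an assumed, arbitrary threshold for flagging a group
    flag = "under-represented" if ratio < 0.8 else "ok"
    print(f"{group}: {ratio:.2f} ({flag})")
# lighter: 1.50 (ok)
# darker: 0.25 (under-represented)
```

A low ratio does not prove that a model will be biased. It is, however, the kind of dataset information clinicians can ask vendors for before relying on a tool's published accuracy.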

In addition to replicating real-world bias through non-representative datasets, AI algorithms that are initially established as ‘fair’ may develop biases. These so-called latent biases, or biases ‘waiting to happen’, can arise in a number of ways.17 One is when an AI algorithm is trained using datasets from one location (eg a local hospital in a high-income city). An algorithm shown to perform fairly in that location may turn out to be biased when it is transferred to another hospital, city or country and encounters different local data. Biased performance can also arise when AI algorithms introduce ‘categorically new biases’: biases that do not merely mimic social biases, but ‘perniciously reconfigure our social categories in ways that are not necessarily transparent to us, the users’.18

Deep learning approaches (eg artificial neural networks) have shown promise in extracting even more complex patterns of information from large datasets by using multiple processing layers.14 Deep learning applications have shown some success in the classification of melanoma,19 prediction of cardiovascular events20 and diagnosis of COVID-19.21 However, an increasing number of reports raise concerns that deep learning-based algorithms could amplify health disparities because of biases embedded in the training data. For example, Larrazabal et al22 examined a model based on deep neural networks for computer-aided diagnosis of thoracic diseases from X-ray images. They found a consistent decrease in performance when the model was trained on images from male patients and tested on images from female patients (and vice versa).22
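The cross-group design described above (train on one group, test on another) can be illustrated with a toy example. The same logic applies to the train-here, deploy-there scenario of latent bias. The following sketch is *not* the method of Larrazabal et al. It replaces the deep neural network with a one-dimensional threshold classifier and uses invented scores for two hypothetical groups, ‘A’ and ‘B’, which could stand for sexes or for hospital sites.

```python
def accuracy_at(threshold, samples):
    """Share of (score, label) pairs classified correctly by 'score >= threshold'."""
    return sum((score >= threshold) == label for score, label in samples) / len(samples)

def fit_threshold(samples):
    """Toy 'training': pick the observed score that maximises training accuracy."""
    candidates = sorted({score for score, _ in samples})
    return max(candidates, key=lambda t: accuracy_at(t, samples))

# Invented toy data: the condition raises the score in both groups,
# but the typical score range differs between the groups.
data = {
    "A": [(1, False), (2, False), (3, False), (6, True), (7, True), (8, True)],
    "B": [(0, False), (1, False), (2, False), (3, True), (4, True), (5, True)],
}

# Train on each group, then test on every group
grid = {}
for train_group, train_samples in data.items():
    threshold = fit_threshold(train_samples)
    for test_group, test_samples in data.items():
        grid[(train_group, test_group)] = accuracy_at(threshold, test_samples)

for (train_group, test_group), acc in grid.items():
    print(f"train={train_group} test={test_group} accuracy={acc:.2f}")
```

With these invented numbers, both same-group cells reach an accuracy of 1.00. The cross-group cells fall to 0.50 (trained on A, tested on B) and 0.83 (trained on B, tested on A). This is the same qualitative pattern of a model performing well for the population it learnt from and worse elsewhere.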

Countering algorithmic bias

Regulatory bodies, including the US Food and Drug Administration23 and Australia’s Therapeutic Goods Administration,24 are in the process of establishing frameworks that specifically address the challenges raised by AI systems. Within the AI research and development community, experts are developing debiasing methods, which involve measuring biases and then removing them through a ‘neutralise and equalise approach’.25 Currently, however, the role of clinicians in minimising the risk (or impact, if unavoidable) of algorithmic bias remains an open question. One possible intervention in clinical practice is ensuring that clinicians continue to use their skills and judgment, including critical thinking and empathy, when using AI-enabled systems.26 Clinicians should also continue to challenge the myth that AI systems are completely objective and free of bias.
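‘Neutralise and equalise’ is the name of a hard-debiasing procedure originally proposed for word embeddings. We assume here that this is the kind of approach the cited work describes. *Neutralise* removes, from a vector that should be neutral (eg an occupation word), its component along an estimated bias direction. *Equalise* adjusts a pair that legitimately differs along that direction (eg a gendered word pair) so that both members sit at the same distance from every neutralised vector. The following sketch assumes unit-length vectors and an already-estimated unit bias direction `g`. The three-dimensional vectors are invented for illustration.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def add(u, v):
    return [a + b for a, b in zip(u, v)]

def sub(u, v):
    return [a - b for a, b in zip(u, v)]

def scale(c, v):
    return [c * a for a in v]

def neutralise(e, g):
    """Remove the component of vector e along the unit bias direction g."""
    return sub(e, scale(dot(e, g), g))

def equalise(e1, e2, g):
    """Return a unit-length pair that differs only along g.

    Assumes e1 and e2 are unit vectors with different projections on g.
    """
    mu = scale(0.5, add(e1, e2))
    mu_b = scale(dot(mu, g), g)
    nu = sub(mu, mu_b)  # shared part of the pair, orthogonal to g
    out = []
    for e in (e1, e2):
        diff = sub(scale(dot(e, g), g), mu_b)
        norm = math.sqrt(dot(diff, diff))
        # Rescale the bias component so the result has unit length
        out.append(add(nu, scale(math.sqrt(1 - dot(nu, nu)) / norm, diff)))
    return out

g = [1.0, 0.0, 0.0]  # hypothetical bias direction
neutral_word = neutralise([0.3, 0.8, 0.5], g)
word_1, word_2 = equalise([0.6, 0.8, 0.0], [-0.8, 0.6, 0.0], g)
print(neutral_word)                                         # [0.0, 0.8, 0.5]
print(dot(word_1, neutral_word), dot(word_2, neutral_word))  # equal values
```

After these two steps, the neutral vector has no component along `g`, and it is equally similar to both members of the pair. Real debiasing work must first *measure* the bias direction from data, a step this sketch takes as given.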

Currently, very few procedural mechanisms, such as checklists or step-by-step guides, exist to counter algorithmic bias. One of these is the Algorithmic Bias Playbook developed by Obermeyer et al at the Center for Applied AI at the University of Chicago Booth School of Business.27 The playbook is intended for health managers (eg chief technical and chief medical officers), policymakers and regulators, and includes a four-step process to guide bias auditing in health institutions.27 At present, however, it remains challenging to develop procedural tools for individual general practitioners who have no background in data science or AI.

Conclusion

AI systems have shown great promise in improving clinical practice, particularly in clinical tasks that involve identifying patterns in large, heterogeneous datasets to classify a diagnosis or predict outcomes. However, there are concerns that AI systems can also exacerbate existing problems that lead to health disparities. There is growing evidence of algorithmic bias, whereby some AI systems perform poorly for already disadvantaged social groups. Algorithmic bias contributes to a persistent cycle of social bias, technological bias and clinician bias. As with other types of bias in medicine, algorithmic bias has practical implications for general practice: it can compromise patient safety and lead to over- or underdiagnosis, delays in treatment and mismanagement. Thus, clinicians should consider the risk of bias when using or deploying AI-enabled tools in their practice.

Key points

- AI systems have the potential to greatly improve clinical practice, but they are not free of errors and biases because they are built on datasets that are generated, collected, recorded and labelled by humans.

- Algorithmic bias refers to the tendency of some AI systems to perform poorly for disadvantaged or marginalised groups.

- One cause of algorithmic bias is the use of datasets that are not representative of real-world populations.

- Clinicians should be aware of the risk of algorithmic bias and seek information about datasets and evidence of performance when deploying AI-enabled tools in their practice.